Are Discrete Diffusion Models Better Than Auto-regressive Models in Text Generation? Uncovering a Hidden Numerical Issue

With SEDD winning the Best Paper Award at ICML 2024, discrete diffusion models have emerged as a promising contender to auto-regressive models in text generation. In this blog, however, we uncover a hidden yet critical numerical precision issue that negatively impacts generation diversity in discrete diffusion sampling. This flaw highlights the limitations of previous evaluations, which rely solely on the incomplete metric of generative perplexity, resulting in a secretly unfair comparison to auto-regressive models. For complete analyses and proofs, please refer to our paper (http://arxiv.org/pdf/2409.02908).

Introduction

In this section, we provide a brief and intuitive overview of both continuous and discrete score-based diffusion models. We recommend referring to Yang Song’s blog and Aaron Lou’s blog for more in-depth explanations.

Likelihood-based probabilistic generative models

Training $p_\theta$ faces two major challenges:

- $p_\theta$ must be normalized over all states, i.e., $\int_{\mathcal X}p_\theta(x)\mathrm d x=1$ (for continuous data) or $\sum_{x\in\mathcal X} p_\theta(x)=1$ (for discrete data). The former involves an intractable integral, and the latter involves a sum over $|\mathcal V|^d$ states, a number that is exponential in the data dimension.

- High-dimensional data distributions are typically sparse and hard to fit (curse of dimensionality).

Score-based diffusion models

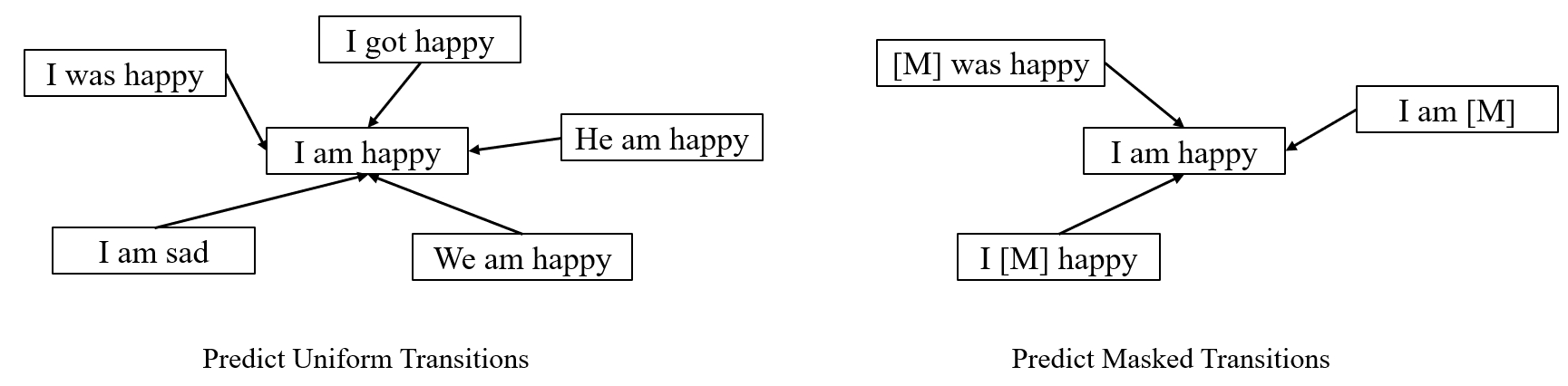

Discrete diffusion models can be defined in a similar score-based continuous-time approach.

In contrast to the continuous case, the score function $\nabla_x\log p_t(x)$ is not applicable as there is no proper gradient in the discrete space. Instead, the model can learn the probability ratio $\frac{p_t(y)}{p_t(x)}$ between different tokens $x$ and $y$, which is known as the concrete score.
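As a toy illustration of the concrete score, the sketch below computes the ratios $p_t(y)/p_t(x)$ for a hand-picked three-state distribution. The function name `concrete_score` and the numbers are assumptions made for illustration; in practice a network estimates these ratios rather than reading them off a known $p_t$.

```python
def concrete_score(p, x):
    """Return the ratios p[y] / p[x] for every state y, given the current state x.

    `p` is a list of (possibly unnormalized) positive masses over the states.
    """
    if p[x] <= 0:
        raise ValueError("p[x] must be positive for the ratio to be defined")
    return [p_y / p[x] for p_y in p]


# Toy single-token distribution over three states (illustrative numbers).
p_t = [0.1, 0.2, 0.7]
ratios = concrete_score(p_t, x=1)

# The same ratios come out of an unnormalized version of p_t.
ratios_unnormalized = concrete_score([1.0, 2.0, 7.0], x=1)
```

Because only ratios are involved, the normalizing constant cancels, which is one reason ratio-based modeling sidesteps the normalization challenge listed earlier.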

In the multi-dimensional case ($d>1$), the number of possible states $|\mathcal V|^d$ grows exponentially with the data dimension (e.g., $50257^{1024}$ for sequences of length 1024 using the GPT-2 tokenizer), and it is computationally intractable to model transitions between two arbitrary states. Instead, the model only predicts probabilities of single-token changes. Besides, both the forward and reverse processes are factorized across dimensions, where all dimensions undergo transitions simultaneously and independently (except that the network is conditional on all dimensions).
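To make the scale concrete, the following back-of-the-envelope sketch compares the size of the full state space with the number of states reachable by changing a single token. It assumes the GPT-2 vocabulary size of 50257 and a sequence length of 1024; the variable names are illustrative.

```python
import math

vocab_size = 50257  # GPT-2 tokenizer vocabulary size
seq_len = 1024      # sequence length d

# |V|^d is far too large to print usefully, so work with its base-10 logarithm.
log10_num_states = seq_len * math.log10(vocab_size)

# States that differ from a given sequence in exactly one position.
single_token_neighbors = seq_len * (vocab_size - 1)

print(f"|V|^d has about {log10_num_states:.0f} decimal digits")
print(f"single-token neighbors of one sequence: {single_token_neighbors}")
```

The full state space has thousands of decimal digits, while the single-token neighborhood is only in the tens of millions, which is why restricting the model to single-token changes keeps the output size manageable.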

Masked Diffusion Models as the Best-Performing Discrete Diffusion

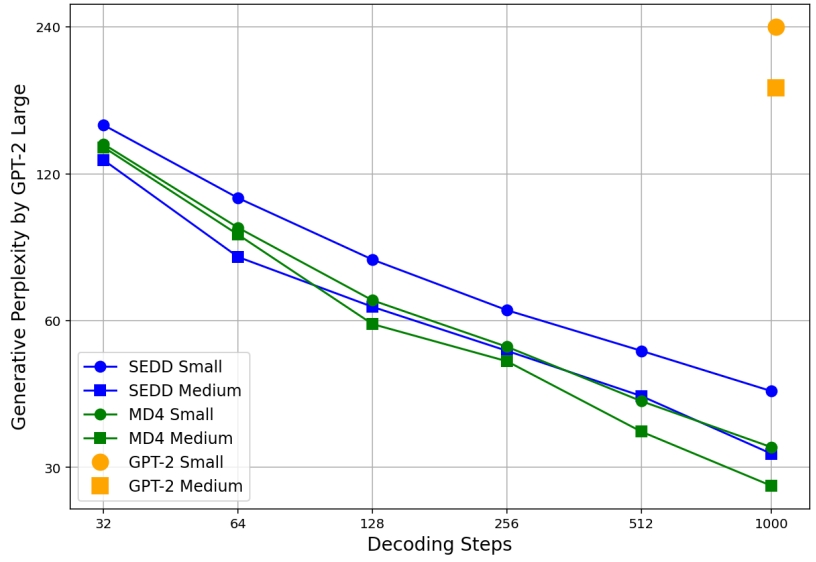

Empirically, the absorbing (or masked) variant demonstrates superior performance over other discrete diffusion schedules such as uniform, and is referred to as masked diffusion models (MDMs). This can be attributed to the simple masked mechanism: transitions are sparse and only happen once between data tokens and the mask token [M] in the whole generation process, which are relatively easier to predict. In some recent works

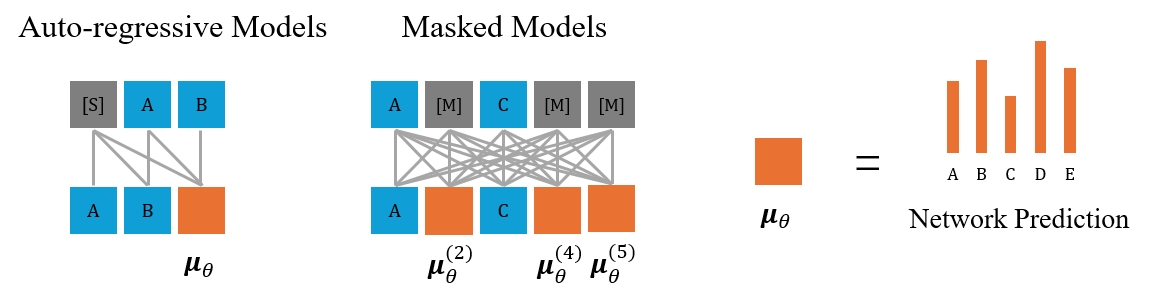

Under mean-parameterization, MDMs become quite similar to typical masked models

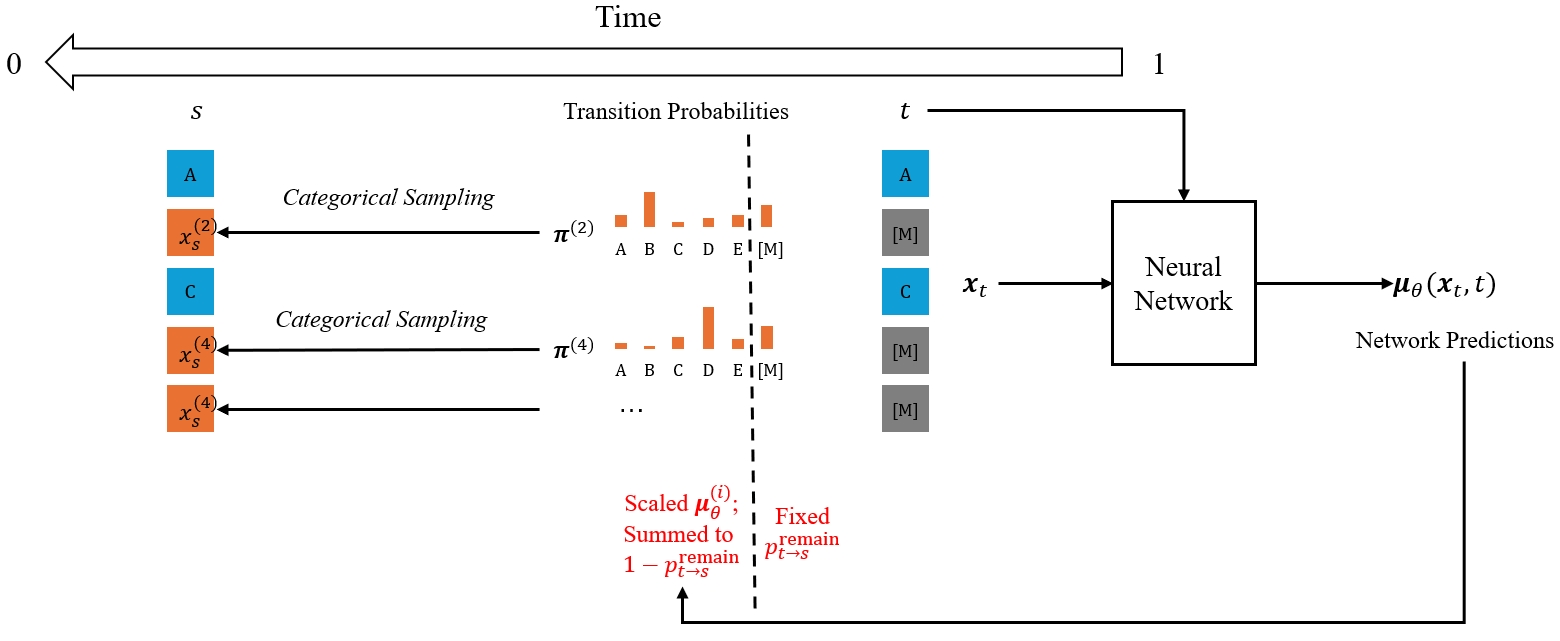

Specifically, let $\mathbf x_t=x_t^{(1)}x_t^{(2)}\cdots x_t^{(d)}$ represent the sequence at time $t$. For each position $i$ satisfying $x_t^{(i)}=\text{[M]}$, the transition from time $t$ to time $s<t$ is performed by sampling $x_s^{(i)}\sim\text{Cat}(\pi^{(i)})$, where $\text{Cat}$ denotes the categorical distribution and $\pi^{(i)}=p_{t\rightarrow s}^{\text{remain}}\mathbf e_{\text{[M]}}+(1-p_{t\rightarrow s}^{\text{remain}})\mu_\theta^{(i)}$. Here, $\mathbf e_{\text{[M]}}$ denotes the one-hot vector for the mask token, and the remaining probability $p_{t\rightarrow s}^{\text{remain}}$ is independent of the network output $\mu_\theta$. In each sampling step of MDMs, whether a masked token will be unmasked is determined by rolling a die (i.e., categorical sampling), which distinguishes it from the token-by-token decoding process of masked models. The number of sampling steps in MDMs can be larger than the sequence length $d$, and a single sampling step can result in no token changes.
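The transition rule above can be sketched in a few lines. This is a minimal illustration, not the samplers used in prior work: the mask index `MASK`, the function `mdm_step`, the fixed `mu` (a stand-in for the network output $\mu_\theta$, which in a real model depends on $\mathbf x_t$), and the constant `p_remain` per step are all assumptions made for the example.

```python
import random

MASK = 0  # hypothetical vocabulary index of the mask token [M]


def mdm_step(x_t, mu, p_remain, rng):
    """One reverse step from time t to s < t.

    Each masked position i is resampled from
    pi = p_remain * e_[M] + (1 - p_remain) * mu[i];
    positions that already hold a data token are left unchanged.
    """
    x_s = list(x_t)
    for i, token in enumerate(x_t):
        if token != MASK:
            continue
        pi = [(1.0 - p_remain) * m for m in mu[i]]
        pi[MASK] += p_remain
        x_s[i] = rng.choices(range(len(pi)), weights=pi, k=1)[0]
    return x_s


# Toy setup: 4 tokens (index 0 is [M]); mu puts no mass on [M].
rng = random.Random(0)
d = 6
mu = [[0.0, 0.5, 0.3, 0.2] for _ in range(d)]
x = [MASK] * d
steps = 0
while MASK in x:
    x = mdm_step(x, mu, p_remain=0.8, rng=rng)
    steps += 1
```

With a large `p_remain`, many steps leave the sequence untouched, so the number of steps needed to unmask everything can exceed the sequence length, in line with the remark above.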

Does Lower Generative Perplexity Indicate Better Quality?

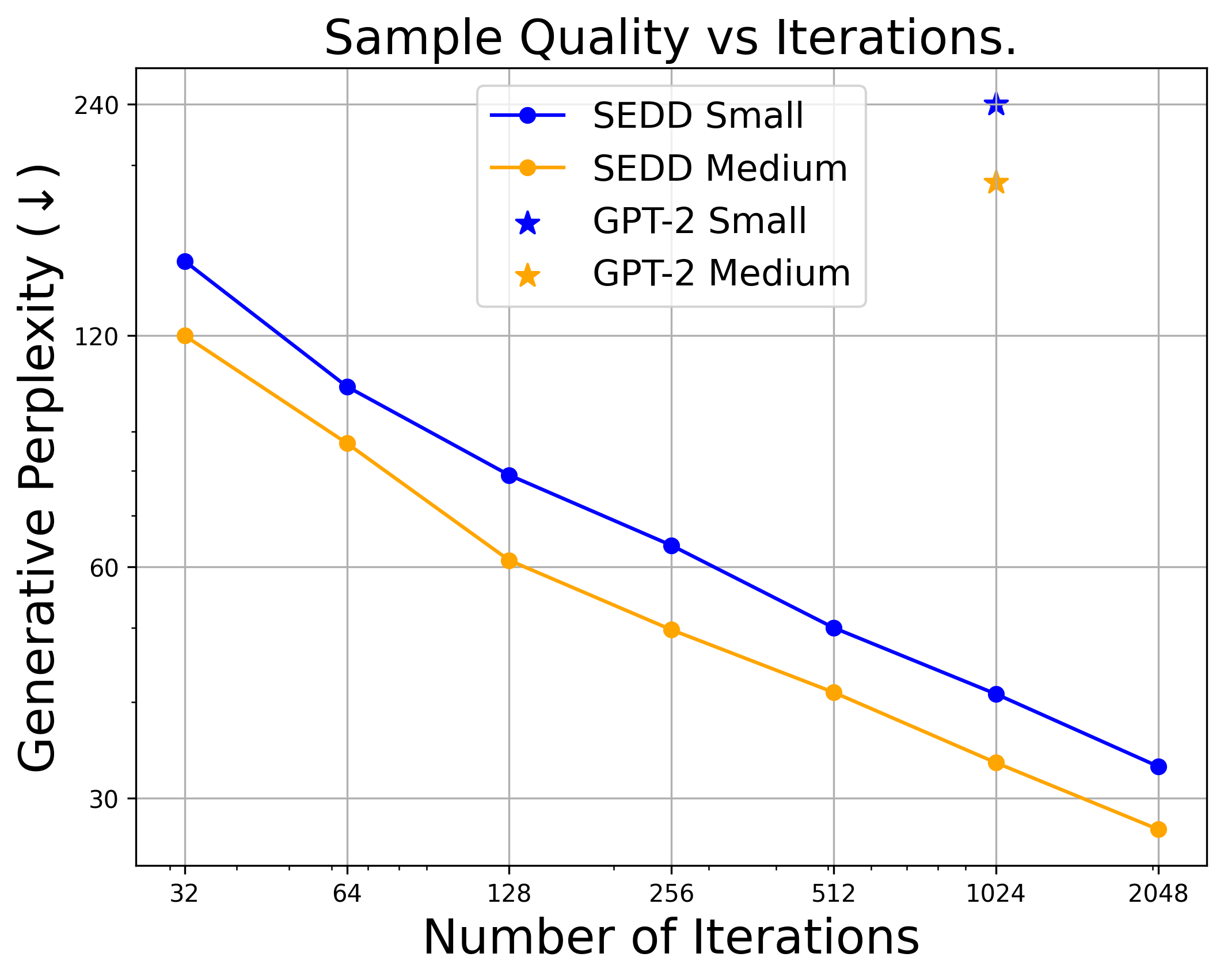

Generative perplexity (Gen PPL) is the main metric in previous works to evaluate the generation quality. Specifically, it measures the likelihood of generated text under some off-the-shelf model (typically GPT-2 Large). Lower Gen PPL means larger probability of the generated sample.
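As a minimal sketch of the metric, Gen PPL can be computed as the exponential of the average negative log-likelihood per token. The per-token log-probabilities would come from the evaluator model (e.g., GPT-2 Large); the numbers below are placeholders, and the function name is an assumption.

```python
import math


def generative_perplexity(token_log_probs):
    """exp of the mean negative log-likelihood (natural log) over tokens."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# Placeholder log-probabilities, as if assigned by an evaluator model.
predictable_text = [math.log(0.5)] * 4   # every token gets probability 0.5
surprising_text = [math.log(0.01)] * 4   # every token gets probability 0.01

ppl_predictable = generative_perplexity(predictable_text)
ppl_surprising = generative_perplexity(surprising_text)
```

Text whose tokens the evaluator finds predictable receives a lower Gen PPL, regardless of whether that predictability comes from fluency or from repetition.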

As suggested by multiple previous works

However, we argue that Gen PPL is not comprehensive for evaluating the generation quality of text. Unlike the Fréchet inception distance (FID) metric for images, which compares the whole distribution of generated images with that of real images, Gen PPL only favors high-probability samples, while neglecting other features like diversity and mode coverage.

Token Diversity Matters

We present two samples from ARMs and MDMs to demonstrate the diversity problem.

<|endoftext|> the new cars are crossovers.

AT&T Insurance Marketing Manager, Megan Maxwell, tells us that Model X was "reasonably priced, effective and inspires strong sentiment among consumers." She says:

Our GM car for discussion is shown as part of our drive 20 percent around the world and even a competitor. Our GM for discussion alt shows as one of our most popular cars in the world. We are in multiple countries introducing firmware for our new vehicles. While we are confident in our prices, we rely upon GM Auto’s sales data and know we must adapt this process to meet the needs of all customers.

The proposed pricing is similar to that of the cheaper Range Rover and other cheaper sport utility vehicles, which are primarily offered through its dealerships. Alongside a Volt, Delphi XE8 includes a plug-in hybrid version called Volt Energy.

"Dynamic pricing is our way to deliver owners of more attractive or more reasonable outcomes or to find more marketable models that appeal to them more than their competitors," notes Maxwell.

Earlier this week, GM analyst Greg Clifford predicted that Intel Global Radical Charge Power Savings (STB) would start at $3,300 over the product lifecycle with an adoption rate of 50 percent by 2025.<|endoftext|>

<|endoftext|> I was striking out, he got it to the third out. I know it wasn’t really a catch there, but he didn’t consider that at all. I knew what it wasn’t about. We scored by one run, so it was a difficult thing for me to go to a “get-out,” but being on the board is not an easy fact. I knew it was in play, and went to the third out that it needed to be thrown to. I had nothing to say about it, and it was the only opportunity for me to get, to make an appeal that might not come back, and it was the only time he saw that at that moment.

And so we continued.

“So we were sitting in the plus-five-and-plus-three-at-night, because we were up on an inning. We were in pinch-hit early in the ninth. I looked at a guy and was very nervous and confused, so when I looked at this man — I can’t remember what he was doing, but to double or dead-se the bases for sure — I said you’ll be the first one up, then I’m out of the ballpark. I felt like something had to be wrong with me, and he turned to me and told me, “that’s as bad as the rest,” just like that — “I bet!” I said calmly. “I bet,’” “Gotta do your best! Do not bet!’” “And when that happens, do you think I can be won by a one or two runs?” He stood there and looked stunned. “You mean that?” “Yes, absolutely.” “‘Yeah, absolutely.” “Well, is that a message to you?’“” And he looked back to me and said, “So we aren’t going out?” “Well, yes,” said me, “but I believe so I believe.”” “I bet,” “Yeah, I bet, but when we’re on the board, how much time are we gonna lose?” “Absolutely not,” “I bet,” “all right. I’m not going out. It’s me, understand.” “I bet, I believe not.” “What happened?”” “It happened!” “Did you hear a clue?” “I said, “Oh, no! I-I-I heard that fifth-dinger! Give me the clue!” The players, myself, and the “Man, Man, Man, it’s just beyond hell!” murmurs of the players. At the same time, I said, myself, “Young man, I’ve got to say — I won’t screw you right here.” I went on, “You can take it. You’re not going to lose.”” I smiled. “You know what you got to admit to yourself? This happened in baseball. I didn’t screw you in baseball, it don’t matter, I’ll screw you in a way.” He took the fifth-dinger and said, “It’s the end in baseball, it’s the end.” “That’s correct!” I said. “Yes, you can’t win in baseball,” I said. “But you’re not winning in baseball.” He turned to me. “No, really, it’s alright whether you’re winning or not.” — “I’m sure,” he said. “Good money!” — I cut off. “You’re not going to get this out. You do.” Those were a few words. 
As I were thinking, “What an enterprise.”

“What are you in baseball?”

“Ah, and it’s a game, not a story and a number. If you believe the most in-the-30s stories are about the when-they-had-to-be-done-as-cardinals-but-recan’t-they-get-in story?” I said “suck,” and “We did stop listening to the number, and we had to come off with the number.”

“Exactly,” said Mike. <|endoftext|>

With as many as 50,000 sampling steps, MDMs can reach an extremely low Gen PPL. However, repetitive patterns such as “I bet!” and “I said” frequently appear, diminishing the diversity of tokens in the sequence.

To quantitatively assess the token diversity, we additionally measure the sentence entropy
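A possible way to compute such an entropy is sketched below. It assumes that sentence entropy means the entropy (in nats) of the empirical token-frequency distribution within a single sequence; this definition, the function name, and the whitespace tokenization are assumptions made for illustration and may differ from the exact setup used in the experiments.

```python
import math
from collections import Counter


def sentence_entropy(tokens):
    """Entropy (in nats) of the token-frequency distribution of one sequence."""
    counts = Counter(tokens)
    n = len(tokens)
    return -sum((c / n) * math.log(c / n) for c in counts.values())


# Whitespace splitting stands in for a real tokenizer.
repetitive = "I bet I bet I bet I said I bet".split()
diverse = "the new cars are crossovers with reasonable prices today".split()

h_repetitive = sentence_entropy(repetitive)
h_diverse = sentence_entropy(diverse)
```

A sequence dominated by a few repeated tokens scores lower than one that spreads its mass over many different tokens, which is the behavior the metric is meant to capture.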

Trade-Off between Generative Perplexity and Entropy

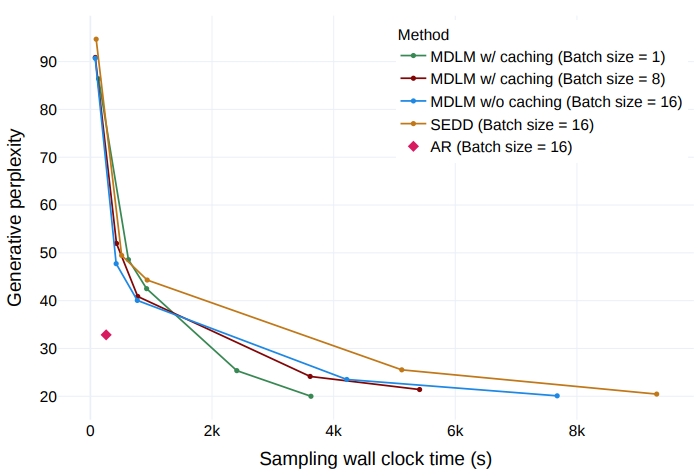

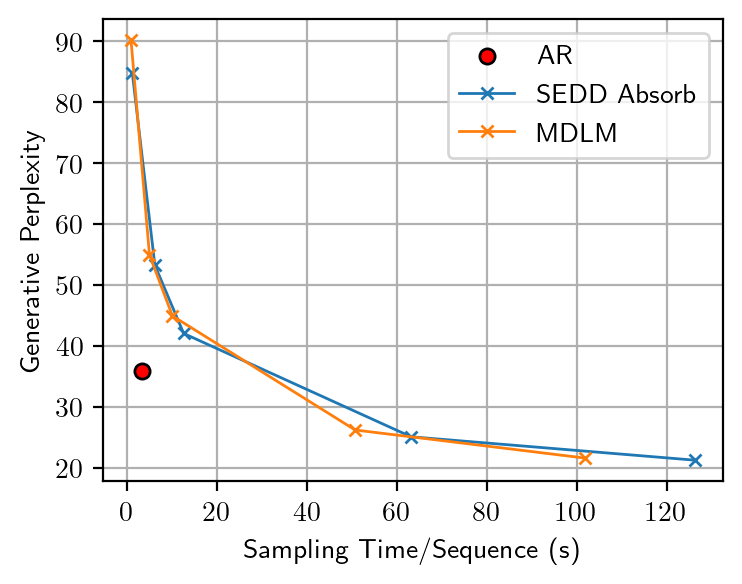

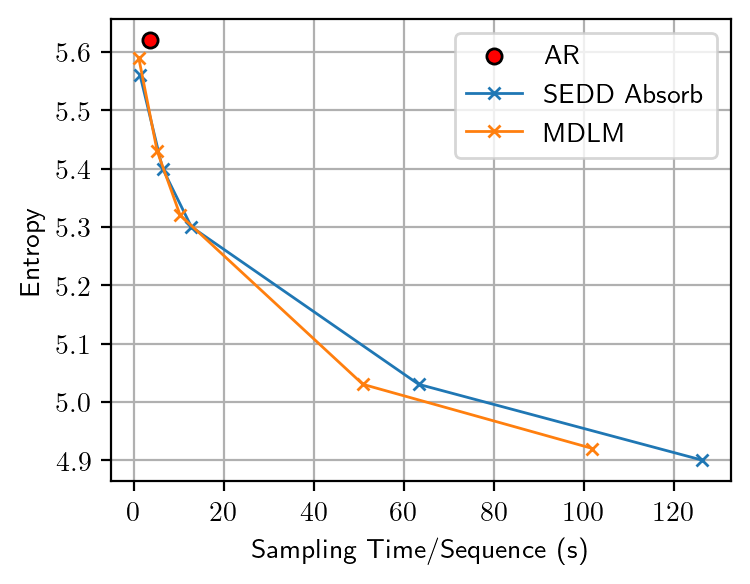

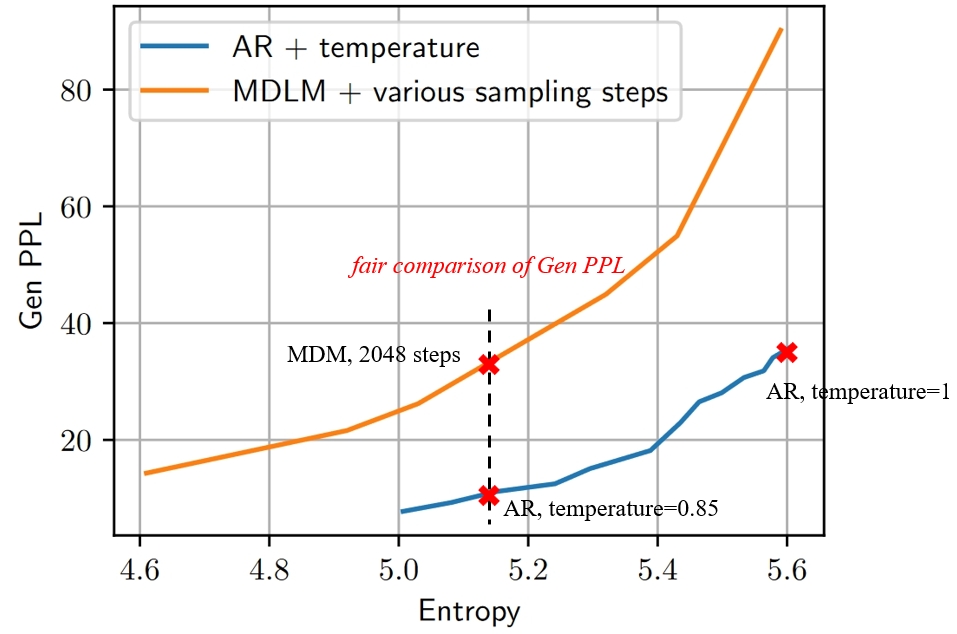

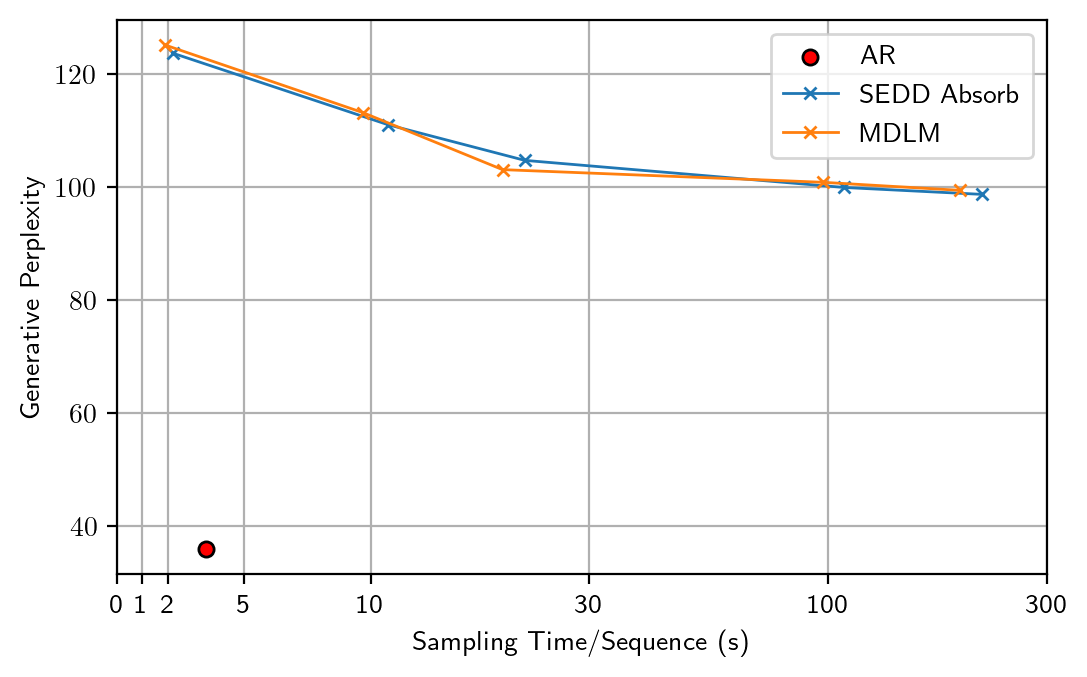

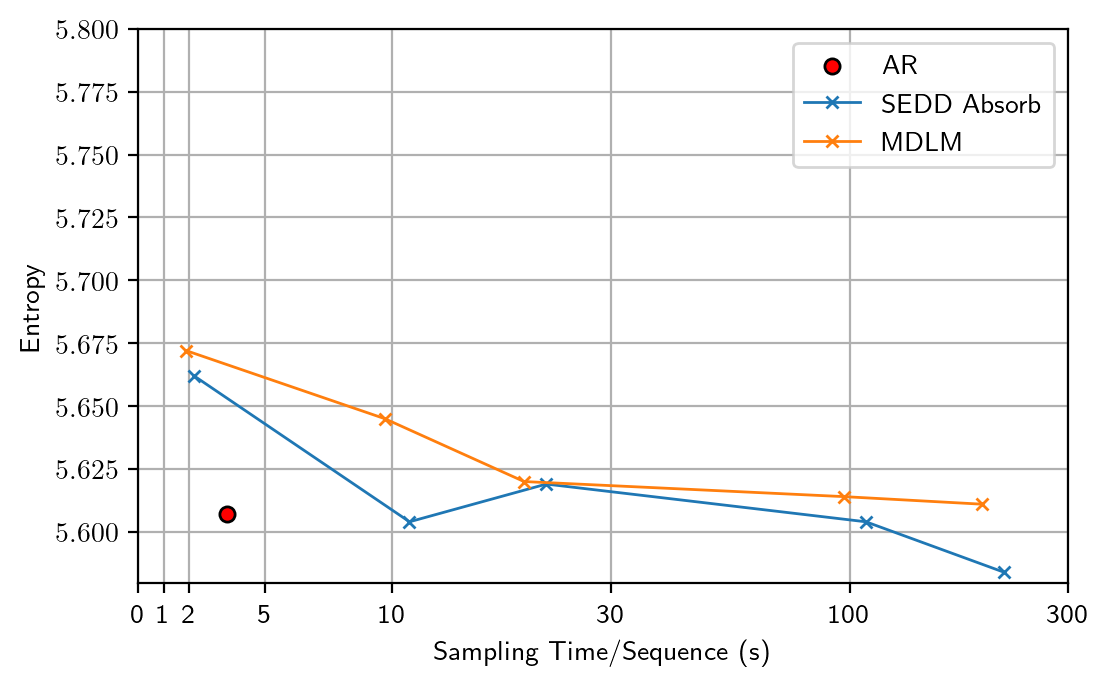

Our observations reveal that varying the number of sampling steps in MDMs creates an inherent trade-off between Gen PPL and entropy. This effectively changes the temperature, leading to an unfair comparison with ARMs, which are not subject to temperature scaling. After manually adjusting the temperature for ARMs to ensure a fair comparison at the same entropy level, we find that the Gen PPL of MDMs falls significantly behind.
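The link between temperature and entropy can be illustrated on a single categorical distribution. The sketch below is a toy example with made-up logits, not the evaluation code: it applies temperature scaling to the logits and reports the entropy of the resulting distribution.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax of logits / temperature, computed in a numerically stable way."""
    scaled = [value / temperature for value in logits]
    largest = max(scaled)
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats, skipping zero-probability classes."""
    return -sum(p * math.log(p) for p in probs if p > 0)


logits = [2.0, 1.0, 0.5, -1.0]  # illustrative next-token logits
entropy_by_temperature = {
    t: entropy(softmax_with_temperature(logits, t)) for t in (0.5, 1.0, 2.0)
}
```

Lowering the temperature concentrates mass on the most likely tokens, which reduces entropy and also tends to reduce Gen PPL; comparing models at matched entropy removes this confounder.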

What is the Root Cause of Reduced Diversity?

The reduced token diversity and low generation quality are unexpected. In theory, increasing the number of sampling steps should reduce discretization errors and more accurately reflect the true model performance, as already seen in continuous diffusion models. We therefore consider this an implementation issue and investigate further to identify the root cause.

Identifying the Numerical Precision Issue

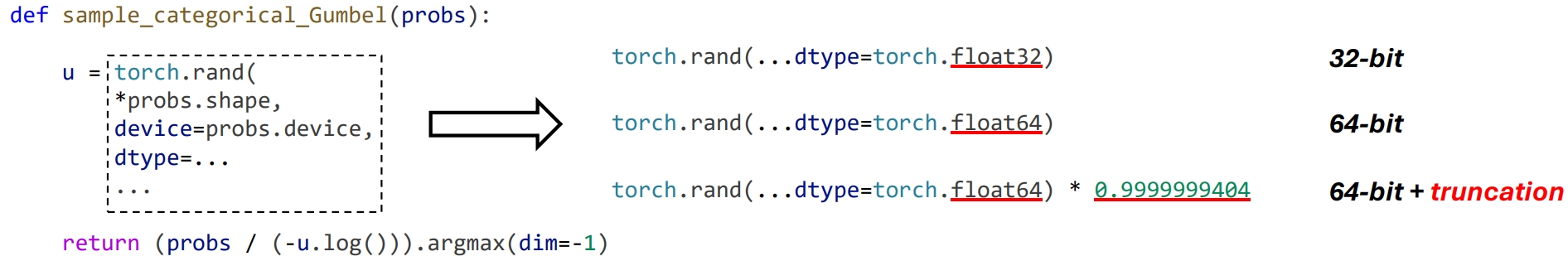

Surprisingly, we find that by simply altering the floating-point precision during sampling from 32-bit to 64-bit, the entropy returns to a normal level similar to ARMs (5.6~5.7), but with a generative perplexity $\approx100$. After careful ablations, we identify the root cause as the numerical inaccuracy in previous Gumbel-based categorical sampling.

Denote $\mathcal U(0,1)$ as the uniform distribution on $[0,1]$, and $\mathcal G(0,1)$ as the standard Gumbel distribution

The operation $g=-\log(-\log u)$ theoretically maps $u\in[0,1]$ to $g\in(-\infty,+\infty)$. But due to the limited representation ability of floating-point numbers in implementation, $u$ is constrained to $[0,1-\epsilon]$ and $g$ is constrained to $(-\infty,M]$ where $M=-\log(-\log (1-\epsilon))$.
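To get a feel for the size of $M$, the sketch below evaluates $M=-\log(-\log(1-\epsilon))$ for two values of $\epsilon$. It assumes that the largest uniform sample is $1-2^{-24}$ in 32-bit and $1-2^{-53}$ in 64-bit, which matches the spacing of floating-point numbers just below 1 but may differ from what a particular random number generator produces; the function name is illustrative.

```python
import math


def gumbel_upper_bound(eps):
    """M = -log(-log(1 - eps)), using log1p so that a tiny eps is not rounded away."""
    return -math.log(-math.log1p(-eps))


# Assumed gaps between the largest uniform sample and 1.
M_32bit = gumbel_upper_bound(2.0 ** -24)
M_64bit = gumbel_upper_bound(2.0 ** -53)

print(f"M (32-bit) = {M_32bit:.4f}")
print(f"M (64-bit) = {M_64bit:.4f}")
```

Under these assumptions the 32-bit bound is roughly 16.6 and the 64-bit bound roughly 36.7, so the 32-bit Gumbel noise is cut off much earlier in the right tail.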

To verify that truncation is the fundamental issue, we conduct ablations by only modifying the categorical sampling code. We manually scale 64-bit uniform samples to match the truncation in the 32-bit case. We then randomly generate 8 samples with 2048 steps and compare the average Gen PPL and entropy.

| Version | Gen PPL | Entropy |
|---|---|---|
| 32-bit | 31.24 | 5.17 |
| 64-bit | 126.11 | 5.66 |
| 64-bit + truncation | 28.64 | 5.12 |

For both Gen PPL and entropy, 32-bit $\approx$ 64-bit + truncation, which confirms the impact of truncation.
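The mechanism behind this ablation can be reproduced on a toy categorical distribution. The sketch below implements Gumbel-max sampling in 64-bit Python floats and optionally rescales the uniform samples so that the Gumbel noise never exceeds a chosen bound $M$. The function names are assumptions, and $M=0$ is a deliberately exaggerated bound (far smaller than any realistic floating-point truncation) so that the bias shows up in a small Monte Carlo run.

```python
import math
import random


def gumbel_max_sample(probs, rng, max_gumbel=None):
    """Draw one class index via the Gumbel-max trick: argmax_k(log p_k + g_k).

    If max_gumbel (M) is given, each uniform sample is rescaled to
    [0, 1 - eps] with 1 - eps = exp(-exp(-M)), so g = -log(-log u) <= M.
    All entries of probs must be positive.
    """
    scale = 1.0 if max_gumbel is None else math.exp(-math.exp(-max_gumbel))
    best_index, best_score = -1, -math.inf
    for k, p in enumerate(probs):
        u = max(rng.random(), 1e-300) * scale  # guard against log(0)
        g = -math.log(-math.log(u))
        score = math.log(p) + g
        if score > best_score:
            best_index, best_score = k, score
    return best_index


def class_frequencies(probs, n, rng, max_gumbel=None):
    """Empirical class frequencies over n Gumbel-max draws."""
    counts = [0] * len(probs)
    for _ in range(n):
        counts[gumbel_max_sample(probs, rng, max_gumbel)] += 1
    return [c / n for c in counts]


probs = [0.1, 0.2, 0.7]
plain = class_frequencies(probs, 20000, random.Random(0))
truncated = class_frequencies(probs, 20000, random.Random(0), max_gumbel=0.0)
```

Without the extra truncation the empirical frequencies track `probs`, while the truncated sampler puts noticeably more mass on the most likely class.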

Theoretical Explanations

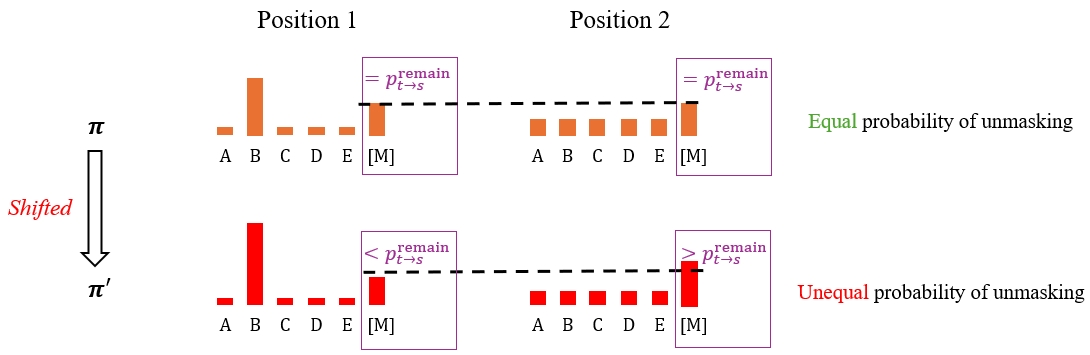

Through further derivation, we are surprised to find that the effect of truncation can be precisely described in closed form. Specifically, suppose the original class probabilities are sorted as $\pi_1\leq\pi_2\leq\cdots\leq\pi_K$. With the truncated Gumbel distribution $\mathcal T\mathcal G(0,1,M)$, the resulting categorical samples instead follow shifted class probabilities $\pi '$:

\[\pi_n'=\pi_n\sum_{i=1}^{n}\beta(i),\quad \text{where } \beta(i)\geq 0\]

To the best of our knowledge, we are the first to reveal such a formulation.

The expression of $\beta(i)$ is:

\[\beta(i)=\frac{e^{\left(K+1-i-\frac{\sum_{k=i}^K\pi_k}{\pi_i}\right) e^{-M}}-e^{\left(K+1-i-\frac{\sum_{k=i}^K\pi_k}{\pi_{i-1}}\right) e^{-M}}}{\sum_{k=i}^K\pi_k}\geq 0\]

This has two main implications:

- As $\beta(i)\geq 0$ and $\pi_n$ are sorted, if $\pi_{n_1}>\pi_{n_2}$, the adjusted class probabilities satisfy $\frac{\pi_{n_1}'}{\pi_{n_2}'}>\frac{\pi_{n_1}}{\pi_{n_2}}$. This indicates that relatively larger probabilities are further amplified. Therefore, for each position in the sequence, the resulting categorical distribution is biased towards classes with higher probabilities, creating an effect similar to lowering the temperature.

- As $\pi$ includes the probability of remaining as [M], different network outputs lead to varying shifting effects, resulting in unequal remaining probability for masked tokens at different positions. Therefore, some mask tokens are prioritized to be unmasked, thereby reducing randomness.
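The closed-form expression can be evaluated numerically to see both the normalization and the amplification effect. The sketch below is an illustration under two assumptions: the function name `shifted_probs` is made up, and for $i=1$ the term involving $\pi_{i-1}$ is taken to be zero (i.e., the convention $\pi_0=0$). An exaggerated bound $M=0$ is compared with a bound of about 16.64, roughly the scale of 32-bit truncation.

```python
import math


def shifted_probs(pi_sorted, M):
    """Evaluate pi'_n = pi_n * sum_{i<=n} beta(i) for ascending, positive pi_sorted."""
    K = len(pi_sorted)
    c = math.exp(-M)
    shifted = []
    cumulative_beta = 0.0
    for i in range(1, K + 1):  # 1-based index, as in the formula
        tail = sum(pi_sorted[i - 1:])  # sum_{k=i}^{K} pi_k
        first = math.exp((K + 1 - i - tail / pi_sorted[i - 1]) * c)
        # Assumed convention: the second exponential vanishes for i = 1.
        second = 0.0 if i == 1 else math.exp((K + 1 - i - tail / pi_sorted[i - 2]) * c)
        cumulative_beta += (first - second) / tail
        shifted.append(pi_sorted[i - 1] * cumulative_beta)
    return shifted


pi = [0.1, 0.2, 0.7]
strong = shifted_probs(pi, M=0.0)    # exaggerated truncation
mild = shifted_probs(pi, M=16.64)    # roughly the 32-bit scale

print("M = 0    :", [round(p, 4) for p in strong])
print("M = 16.64:", [round(p, 8) for p in mild])
```

The shifted probabilities still sum to one, the ratio between the largest and second-largest class grows under truncation, and at the 32-bit scale the per-draw shift is tiny, consistent with the observation that the effect only becomes notable when it accumulates over many sampling steps.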

Both factors reduce the diversity and lower the entropy. However, we find that 32-bit categorical sampling produces similar results to 64-bit in token-by-token decoding procedures of ARMs and masked models, suggesting that the first factor is relatively insignificant. In contrast, the distinctive reverse-time sampling procedure of MDMs also suffers from prioritized unmasking. The temperature-lowering effects accumulate across numerous sampling steps, eventually leading to notable diversity issues, even under 32-bit floating-point precision.

Concluding Remarks

In this blog, we illustrate how previous works on masked diffusion models secretly suffer from numerical precision issues, leading to somewhat unfair evaluations and doubtful claims. This blog is partially based on our paper on arXiv, where we additionally prove that masked diffusion models are exactly equivalent to masked models in both training and sampling, except for some minor aspects like the loss weighting. Our investigation suggests:

- Generative perplexity alone cannot comprehensively reflect the text generation quality. In practice, it is better to use the trade-off curve between generative perplexity and sentence entropy for a more holistic evaluation.

- Masked diffusion models are essentially performing maximum likelihood training of masked models. According to our practice, masked diffusion models, along with other discrete diffusion variants, are far from beating the auto-regressive paradigm in text generation.

Despite our negative findings, we acknowledge that the text-based experiments may inherently favor ARMs, as text naturally follows a left-to-right order that ARMs are better suited to model. Recent works on masked modeling of images